The TWS Metric: How to Measure Meaningful Experiences

Chapter Five: Principle 4—Value is in Time Spent

Dear Friends,

This is one of my favorite topics to write about: time value. It’s obvious that what you measure will be what you design for. Even while we are transitioning to a world of intelligent experiences, there’s still much that needs to be done to evaluate the experiences that customers have. And sometimes the best way to improve the experience is to ask better questions. I hope you enjoy this post.

The TWS Metric: How to Measure Meaningful Experiences

About six years ago, the members of the Experience Strategy Collaborative had had enough. At a summit we hosted, they talked. Their grievances were many and far-ranging, but the deeper we dug the more intertwined the topics became. The metric the company had chosen to deploy to measure customer service was being gamed by the employees (‘you have to give me a 10 or I’ll lose my job’). The company’s numbers stalled out after 18 months. The customers wouldn’t answer the post-experience survey questions, even if the survey was only one question. But worse, when they did get people to answer that one special question determined by the company to be the key indicator of success, they couldn’t actually interpret the results. If the scores were higher, they didn’t know why. If the scores were lower, they had to run a follow-up survey to try to surmise the problem.

That goes directly against the whole idea of a post-experience survey. You ask the question right after the experience so that you can fix the experience, or so the purveyors of the tool would have you believe. But the Collaborative members, who came from various industries, couldn’t take action based on a question that was vague and, worse, had been created in the 90s for a very, very different marketplace.

Perhaps, they wondered, the question was simply worn out. But since the entire CX industry was being held captive by one consulting company’s survey question, they feared they would lose their benchmarks, and therefore support from senior leadership to keep some form of measurement going.

Out of these discussions grew a plan for a new, better way of measuring experiences. What I’m going to share with you has been vetted by thirty companies and piloted in dozens of situations. If you are the big consulting company that wants everyone to use the same question, the size of our study will sound like peanuts. But if you are fed up with the utterly predictable yet frustratingly opaque output you get from said big consulting company, lend me your ear.

As I mentioned in my previous post, the three key questions a company needs to answer in order to shift the focus on value creation toward meaningful experiences are:

1. Did the company get the job done for the customer?

2. Was the customer actually engaged with the experience?

3. Did the customer find value in the time spent in the experience?

We can use these three topic areas to help us build a metric that fits the specific experience we are trying to study. While there are numerous ways to gather data about these three questions, what the Collaborative members needed, and what the CX movement is crying out for, is a post-experience tool that will help them take action in a specific situation.

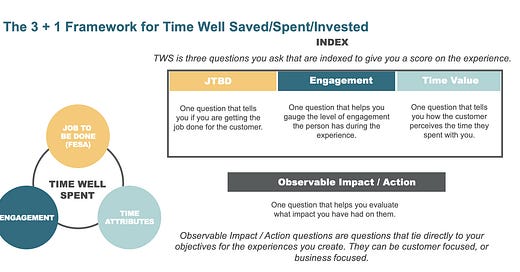

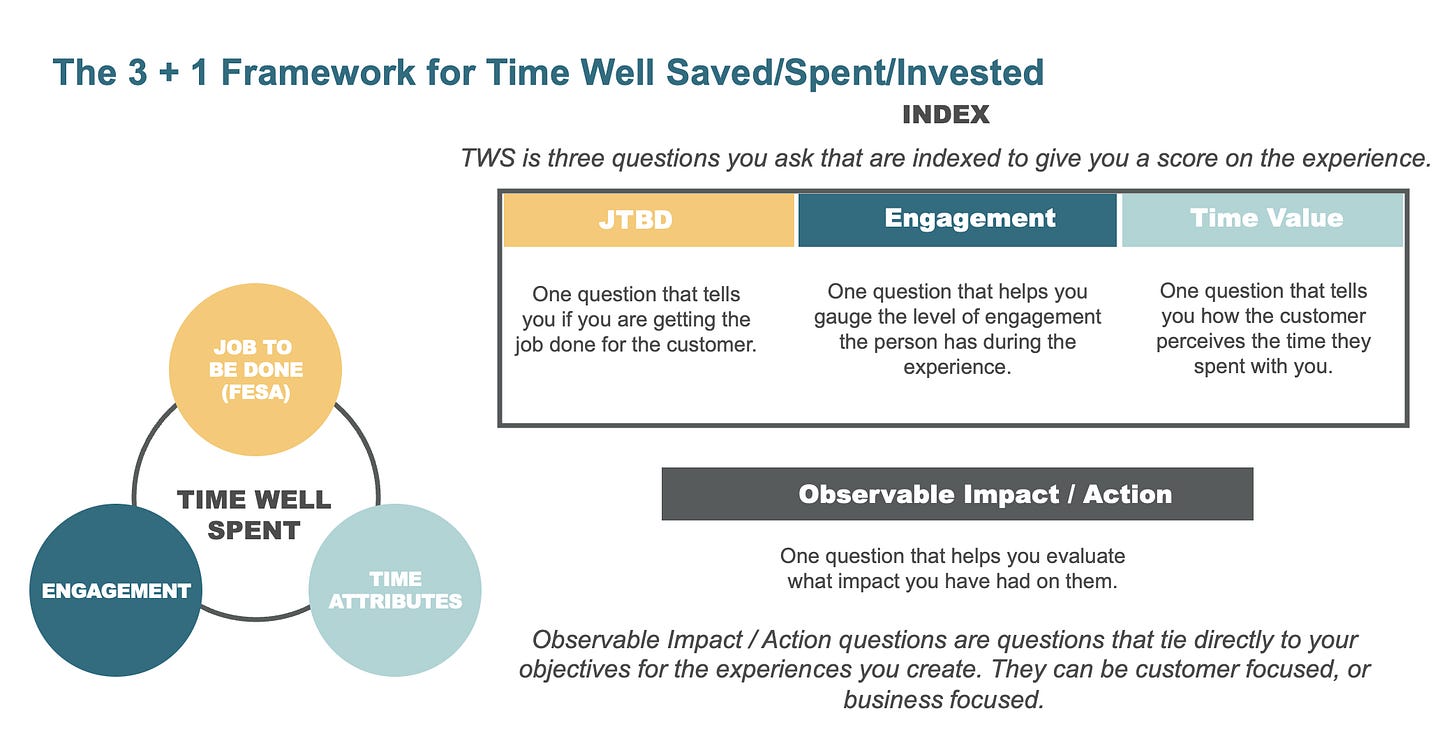

The 3 + 1 Framework

For post-experience measurement, companies need one question that tells whether the company is getting the job done, one question that helps gauge the level of engagement, and one question that tells the company the customer’s perceived value of the time spent. In addition, many companies today want an impact question that is directly tied to business objectives. See the chart below.

From the first three questions, the company creates an index that provides a score for the experience. It is that score that the company tracks.
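To make the idea of an index concrete, here is a minimal sketch in Python. The post does not spell out the formula, so everything below is an assumption for illustration: a 1-to-5 answer scale, equal weighting of the three questions, and a rescaling to 0-100. The names `Response`, `experience_index`, and `tracked_score` are hypothetical.

```python
from dataclasses import dataclass
from statistics import mean

# Assumption: each question is answered on a 1-5 scale.
SCALE_MIN, SCALE_MAX = 1, 5


@dataclass
class Response:
    """One customer's answers to the three index questions (hypothetical shape)."""
    job_done: int    # Did we get the job done?
    engagement: int  # Was the customer engaged?
    time_value: int  # Was the time well spent?


def experience_index(r: Response) -> float:
    """Equal-weighted mean of the three answers, rescaled to 0-100 (assumed formula)."""
    scores = (r.job_done, r.engagement, r.time_value)
    for s in scores:
        if not SCALE_MIN <= s <= SCALE_MAX:
            raise ValueError(f"score {s} is outside {SCALE_MIN}-{SCALE_MAX}")
    return 100 * (mean(scores) - SCALE_MIN) / (SCALE_MAX - SCALE_MIN)


def tracked_score(responses: list[Response]) -> float:
    """The single number the company tracks: the average index across responses."""
    return mean(experience_index(r) for r in responses)
```

Under these assumptions, a customer who answers 4, 5, and 3 gets an index of 75. Keeping the three raw answers next to the index matters, because the components are what tell a team where to look when the score moves.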

Why three index questions?

Each question focuses on a specific part of the experience that needs to be tracked. But you might be thinking you are never going to get customers to answer all three questions, let alone a fourth. The number of questions people will answer depends upon the channel, the amount of time the person spent, and the quality of the questions. For situations where only one question is appropriate, rotate the questions. This will do two things. First, because the questions change, people are more likely to think about their response rather than just choosing a high or low score. Second, it forces the company to aggregate the data based on situational factors (time of day, high-volume activity, etc.) rather than focus on ‘who’ segmentation. It keeps the company focused on its situational market.
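Here is one way the rotation could look in practice, again as a hedged sketch rather than the Collaborative’s actual tooling. It assumes a simple round-robin rotation and that each answer is stored with a situational label such as "morning" or "evening". The function names and the record shape are hypothetical; the question wording is taken from this post.

```python
from collections import defaultdict
from itertools import cycle
from statistics import mean

# The three index questions, keyed by topic.
QUESTIONS = {
    "job_to_be_done": "Did we help you accomplish what you hoped you would?",
    "engagement": "How enjoyable was the experience for you?",
    "time_value": "Was the experience worth the time you spent?",
}


def rotating_topics():
    """Yield topics in round-robin order so each survey asks a different question."""
    return cycle(QUESTIONS)


def aggregate_by_situation(records):
    """Group scores by situation, then by topic, and average them.

    `records` is an iterable of (situation, topic, score) tuples, where
    `situation` is a label such as "morning" (an assumed convention).
    """
    buckets = defaultdict(lambda: defaultdict(list))
    for situation, topic, score in records:
        buckets[situation][topic].append(score)
    return {
        situation: {topic: mean(scores) for topic, scores in topics.items()}
        for situation, topics in buckets.items()
    }
```

Because any one customer answers only one of the three questions, the index has to be assembled per situation rather than per person, which is exactly the shift away from ‘who’ segmentation described above.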

Starting Point Questions

When we ask experience strategists why they deploy C-Sat or NPS, they say it’s because the question is simple to execute. They like the fact that the questions are general enough that they can be used across the entire business. They want to be able to compare their score to other companies. And they want to build a simple story for senior leadership. Of course, in order to activate against the score, they must do follow-up surveys, so the mechanism isn’t nearly as simple as they want to believe.

Still, there is an advantage to having a broad-based question that can be applied across many situations. So we tested a variety of questions over several years. These are the questions that worked best.

Job to be Done

Did we help you accomplish what you hoped you would?

Engagement

How enjoyable was the experience for you?

Time Value

Was the experience worth the time you spent?

All three of these questions score better with customers than the C-Sat and NPS questions. Customers understand what is being asked and they are more likely to feel like the company cares about their response.

Okay, again: why three questions?

Now you might be thinking to yourself that one of these questions is the real, magic question. Perhaps it’s the time value question. After all, aren’t we trying to understand the value created from the time spent? Or maybe it’s the engagement question? Or the job to be done question? Each question is powerful, more powerful than what you are probably using right now. What makes them powerful is that they actually address a key issue that the company needs to stay focused on. The questions keep the company focused on engagement, JTBD, and time value.

When you share scores with teams that need to take action, you already have a head start on where the team should focus. And when it comes to experiences, the way to fix the issues almost always boils down to time value, engagement, or the job to be done.

When you ask about all three, you create a powerful index that can help you improve the value of the experience for the customer immediately and for the future.

To be continued …